Tech Vision is Localogy’s series that spotlights emerging tech. Running semi-weekly, it reports on new technologies that Localogy analysts track, including strategic implications for local commerce. See the full series here.

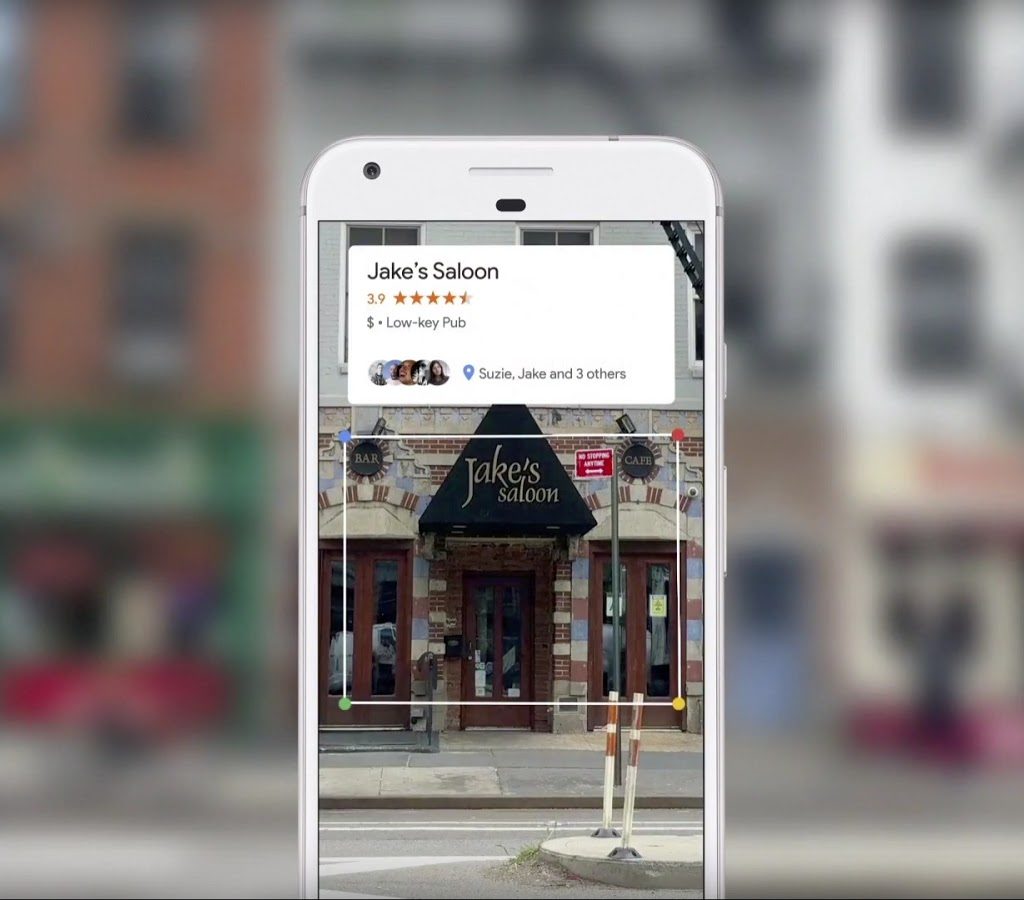

Visual search is one of many technologies Google is exploring to future-proof its core search business and hedge across potential directions. A visual front end, via Google Lens and Live View navigation, builds on mobile hardware, computer vision, looming 5G connectivity and Gen Z camera affinity.

As we’ve examined, there are lots of local commerce implications. Live View navigation is obviously very local. Google Lens — a.k.a. “search what you see” — meanwhile has potential utility for local discovery. Whether it’s style items or storefronts, it’s an intuitive way to get info on an object quickly.

The storefront example could shine in certain local discovery contexts, replacing or augmenting the way we currently conduct high-intent proximity searches on smartphones. Google is putting it out there to see what sticks, and will likely formulate monetization accordingly and in native ways.

This week, a patent filing indicated another possible direction Google could take local-visual search. It has filed for intellectual property around virtual “graffiti” that users can create in ways that are then discoverable and consumable by others. Could this engender a sort of visual user-generated content?

According to Business Standard’s summary of the patent filing:

The company in its patent specification filed with the Patent Office says that with virtual graffiti, if one may wish to leave a message for his or her friend to try a particular menu item at a restaurant, the message may be virtually written on the door of the restaurant, and left for the second user to view. When the friend visits the restaurant, he or she will receive an indication that virtual graffiti is available for their view. The message will then appear to them on the door of the restaurant when viewed with an augmented reality system.

Just as Google’s local listings and GMB strategy depend on user-generated content, could a similar paradigm apply in a visual search world? Could users’ digital markings left on physical places be a valuable content source for Google? If so, conditioning the behavior and seeding activity makes sense.

Cloud Anchors

As further background, this builds on other Google AR tech including its ARCore developer kit and cloud anchors. This stems from the AR cloud foundational principle of image persistence. That is, placing a virtual graphic in a real-world location and having it stay there (not as easy as it sounds).

This principle, like AR in general, is inherently very “local.” Not only can graphics or informational overlays add relevance to physical-world items, but their very placement can communicate location-based relevance. This hinges on image persistence technology with millimeter accuracy (enter 5G).

That gets back to the “graffiti” patent filing. It could signal that tools relevant to local commerce are on the road map. Or, like many patent filings, it could be an experimental red herring. But meanwhile, it’s a valuable thought exercise to pull on that string. What use cases could this or other AR tools bring us?

It’s also worth noting that this is an IP filing in India, where demand signals, user behavior and other factors are unique to the region. Our minds go to how the above could apply in western markets, but the outcome there could be entirely different.

Finally, for further context, this aligns with one of our 2020 predictions for Google’s continued pursuit of a visual front end. We’ll keep watching for evidence to validate the prediction… or take ourselves to task.

5. Google Cultivates a More Visual Front End: To future-proof its core search business, Google will invest heavily in cultivating and marketing existing visual search products such as Google Lens (“search what you see”) and Live View AR navigation. For Google this is driven by reasons similar to embracing voice search: to diversify search formats, boost volume, etc. It will start by pushing the use case around general interest searches (pets, flowers, etc.). But after it conditions the user behavior, it will flip the monetization switch by focusing more on monetizable items. For example, visual search is natively aligned with and highly conducive to fashion items and local discovery. It will jumpstart all of the above in 2020 by “incubating” these products in core search to get them in front of users and stimulate demand for these new visual formats.